What is Keyword Density?

Keyword density is the percentage of the words on a page that are a given keyword or key phrase. It’s calculated by dividing the number of times the keyword appears on the page (the keyword frequency) by the total number of words on that page, then expressing the result as a percentage. For example, a keyword that appears 10 times on a 500-word page has a keyword density of 2%.
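The calculation described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular checker works: the function name `keyword_density` and the simple word tokenizer are assumptions made for the example, and real tools may count words and phrases differently.

```python
import re

# Hypothetical tokenizer: lowercase runs of letters, digits, and apostrophes.
WORD_PATTERN = re.compile(r"[a-z0-9'’]+")


def keyword_density(text: str, keyword: str) -> float:
    """Return how often `keyword` appears in `text`, as a percentage of all words."""
    words = WORD_PATTERN.findall(text.lower())
    phrase = WORD_PATTERN.findall(keyword.lower())
    if not words or not phrase:
        return 0.0
    n = len(phrase)
    # Keyword frequency: count each position where the phrase matches
    # a run of consecutive words on the page.
    frequency = sum(
        1 for i in range(len(words) - n + 1) if words[i:i + n] == phrase
    )
    # Density = keyword frequency / total words, shown as a percentage.
    return frequency / len(words) * 100


if __name__ == "__main__":
    sample = "Plumber tips from a local plumber"
    print(f"{keyword_density(sample, 'plumber'):.1f}%")  # prints 33.3%
```

Here the sample text has six words and the keyword appears twice, so the density is 2 ÷ 6, or about 33.3%. For a multi-word key phrase, this sketch counts each occurrence of the phrase once and still divides by the total word count, which is one of several reasonable conventions.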

Example

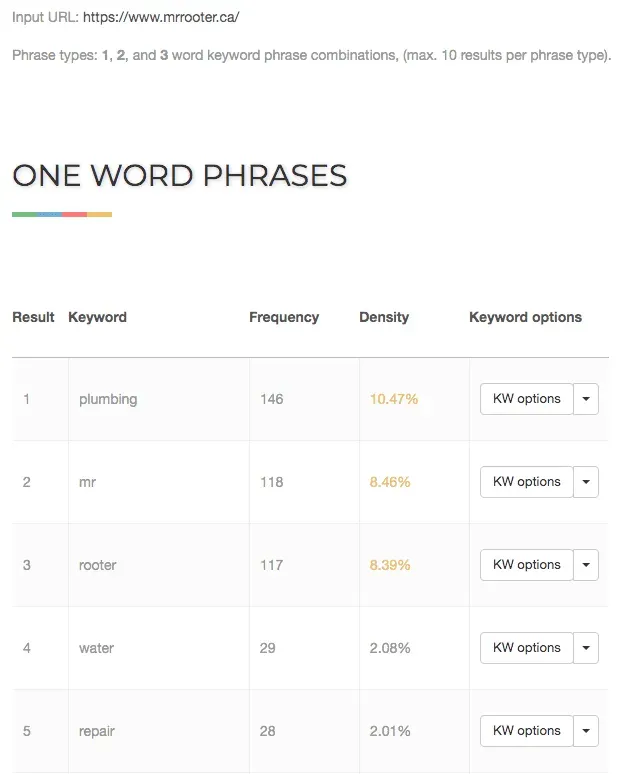

To illustrate this, we will use an online checker (https://www.seoreviewtools.com/keyword-density-checker/) to scan https://www.mrrooter.ca/. The keyword density checker from SEO Review Tools is a free tool that automatically scans the page, counts the number of times a keyword is used, and calculates the keyword density for us.

The image above shows the keyword density of the home page as of February 2, 2021.

History

In the early days of SEO, keyword density was a major factor in determining how relevant a page was to a topic. It was also used to judge whether a page might be spammy: an intentionally high density combined with unnatural, hard-to-read text was a warning sign.

Since early search engines relied on keyword matching to determine the topic of a page, having lots of keywords on the page made sense at the time. Most SEOs aimed for a density somewhere between 1% and 5%. This practice helped pages rank better in search engines, especially if the content was valued by the audience, relevant to the search query, and popular.

Unfortunately, a strong focus on keywords created some challenges and led to keyword stuffing and other frowned-upon practices in SEO, so Google started tweaking its algorithm.

Google started sharpening its focus on content quality back in 2011 with the Panda update, but this was only the start.

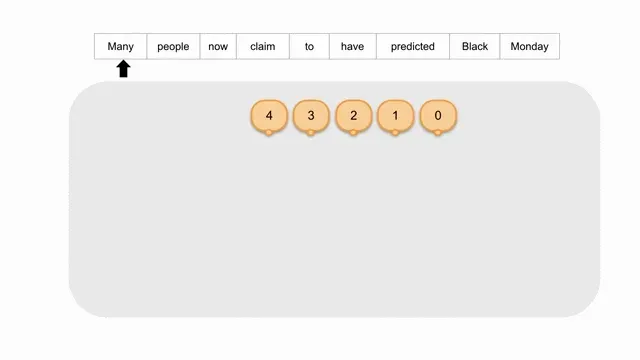

The Hummingbird update in 2013 was another major step for search engines to better understand content and context.

In 2017, Google launched SLING, an experimental system for parsing natural language text directly into a representation of its meaning.

It’s really interesting technology, but very technical and complex to understand. SLING essentially trains a recurrent neural network by optimizing for the semantic frames of interest.

Then Google had a breakthrough with bidirectional transformers.

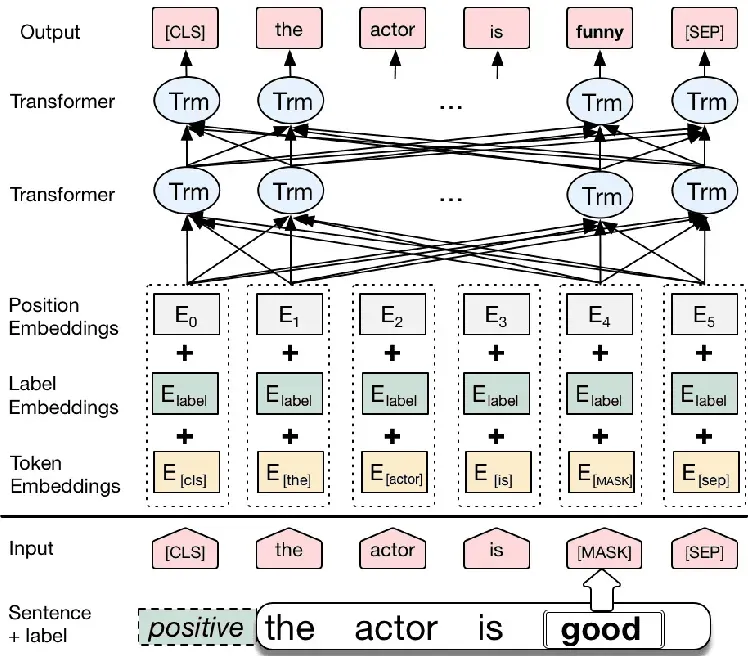

In 2019, Google introduced the BERT (Bidirectional Encoder Representations from Transformers) algorithm, which is built on natural language understanding and artificial intelligence. It is one of the most significant technical updates we have seen in search!

BERT is a pre-trained, further trainable AI model that processes each word in relation to all the other words in a sentence, rather than one by one in order. The details of BERT and how transformers work are described in this paper by Google.

Today, BERT helps Google better understand topics and concepts in sentences, paragraphs and search queries, without relying on keyword density.

FAQ

Is keyword density important today?

Senior Webmaster Trends Analyst at Google, John Mueller, explains how Google handles keyword density and stuffing.

Our systems are built to ignore keyword stuffing. Sites can still rank despite using keyword stuffing. Many sites follow old, outdated, bad advice, but are still reasonable sites for users, and search.

— 🍌 John 🍌 (@JohnMu) October 20, 2020

In fact, Google confirmed as early as 2014 that its search engine was already moving away from keyword density:

“Keyword density, in general, is something I wouldn’t focus on… Search engines have kind of moved on from there over the years… They’re not going to be swayed if someone has the same keyword on their page 20 times.” John Mueller, Google

Here’s the Google webmaster recording from 2014.

What is the ideal keyword density number?

An optimal keyword density is an SEO myth.

Are keywords still important in SEO?

Yes, SEOs still care about keywords, but not in the same way that they used to. Modern SEOs focus on creating unique, valuable, and relevant content on the right topics.

I read a blog post saying that density is a top ranking factor?

There’s a lot of content about SEO out there! Some of it is outdated and never refreshed, some of it is inaccurate, and some of it is very helpful.

Is having a keyword density that’s too high bad for SEO?

Check out this page explaining keyword stuffing.